CNAS Undergraduate Research Symposium at MSU

After learning the fundamentals of deep learning and computer vision, I began my journey into implementing a real-time system to control a drone using hand gesture detection.

Research Project

Abstract

In this research, we established an approach for human-drone interaction through real-time hand gesture recognition using computer vision and deep learning techniques. Our system aims to provide an intuitive method of controlling UAVs, enhancing their accessibility for various applications such as search and rescue, aerial photography, and surveillance.

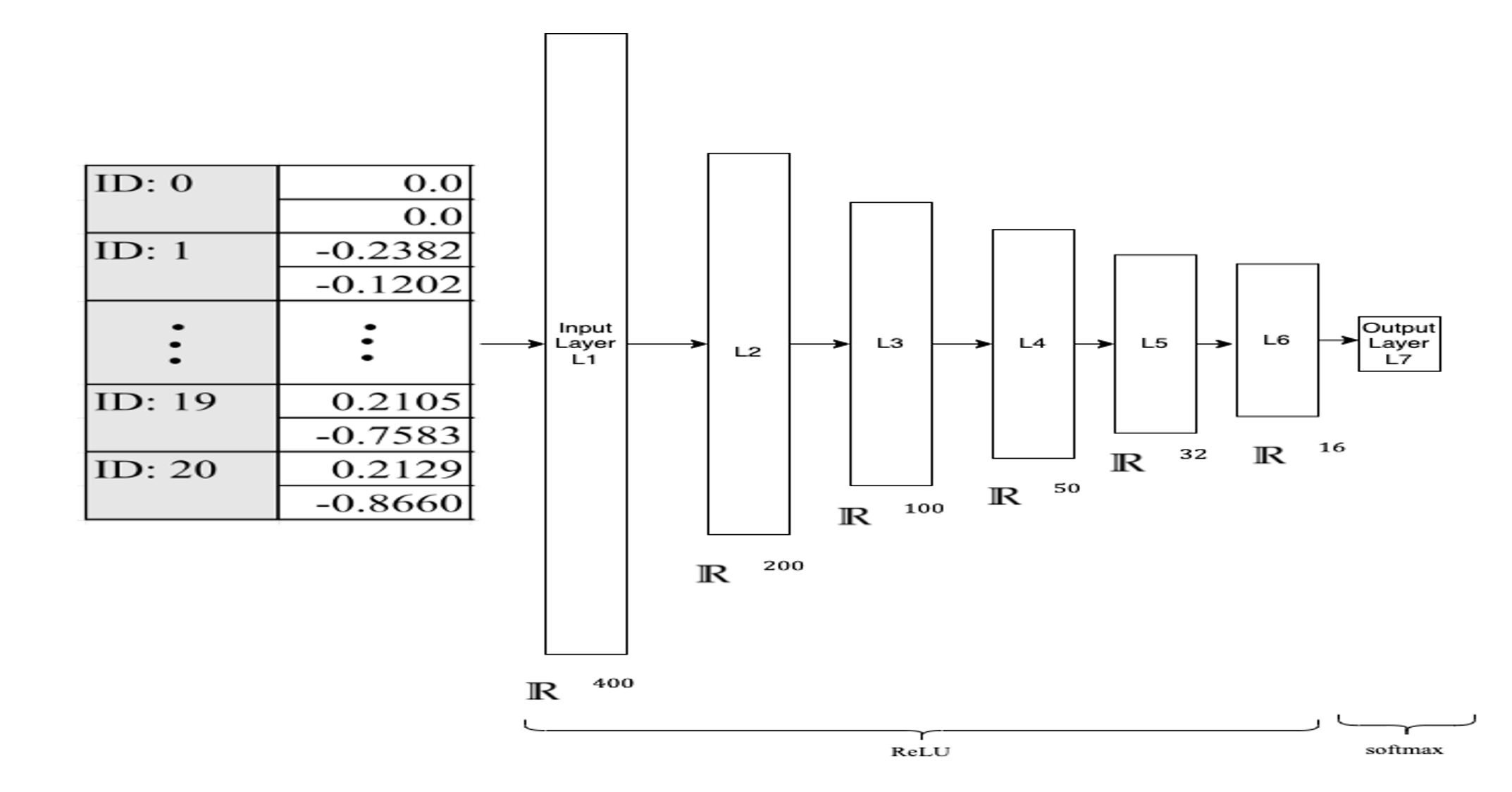

The system employs a neural network (NN) model to classify hand gestures captured by a camera mounted on the drone. The model is trained on a dataset of hand keypoints extracted using Google’s MediaPipe. The keypoints are pre-processed into relative coordinates and then normalized to create a robust representation of the gestures. We implemented nine distinct hand gestures for drone control, including takeoff, landing, ascend, descend, flip left, flip right, move left, and move right. The system runs in real time and recognizes gestures with 96.6% accuracy. These results show the potential of hand gesture recognition for intuitive human-drone interaction, opening new directions for drone applications and enhancing user experience.

Methodology

- Data Collection

  - We collected a diverse dataset of gestures using Google’s MediaPipe framework from video frames captured under various conditions to train our machine learning model.

- Feature Extraction

  - The x and y-axis coordinates of each keypoint were extracted and converted to relative coordinates, then normalized to make the model scale, translation, and rotation invariant.

- Model Development
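
To make the feature extraction step more concrete, here is a minimal sketch of how keypoints could be converted to relative coordinates and normalized. It is not the project's actual code. It assumes the 21 hand landmarks that MediaPipe Hands produces, uses the wrist (landmark 0) as the reference point, and scales by the largest absolute coordinate. The function name `preprocess_keypoints`, the choice of reference point, and the scaling rule are all assumptions made for illustration. This sketch only covers translation and scale; any rotation handling is not shown.

```python
import numpy as np


def preprocess_keypoints(keypoints):
    """Turn raw (x, y) hand keypoints into a flat, normalized feature vector.

    keypoints: array-like of shape (21, 2), one (x, y) pair per landmark.
    Hypothetical helper for illustration; not taken from the project code.
    """
    pts = np.asarray(keypoints, dtype=np.float64)

    # Relative coordinates: subtract the reference landmark (assumed: wrist,
    # index 0) so the position of the hand in the frame no longer matters.
    rel = pts - pts[0]

    # Normalization: divide by the largest absolute value (assumed rule) so
    # the apparent size of the hand in the frame no longer matters.
    max_abs = np.abs(rel).max()
    if max_abs > 0:
        rel = rel / max_abs

    # Flatten to a 1-D vector [x0, y0, x1, y1, ...] for the classifier input.
    return rel.flatten()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hand = rng.random((21, 2))           # stand-in for detected keypoints
    shifted = hand * 3.0 + 5.0           # same hand, larger and moved
    same = np.allclose(preprocess_keypoints(hand), preprocess_keypoints(shifted))
    print("features unchanged by shift and scale:", same)
```

With this kind of pre-processing, the same gesture made closer to or farther from the camera, or in a different part of the frame, maps to the same feature vector before it reaches the classifier.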

Demo Presentation

You can read more about the other students' research projects on the official website:
